Task-level metrics

Accuracy Metrics

Task-level accuracy metrics measure how closely the Centaur-generated labels for a task match the labels provided by a customer team or an admin. For this reason, all accuracy metrics consider only Gold Standard cases (cases for which an admin or customer team has provided a Correct Label) and compare that Correct Label with the Majority Label produced by the Centaur network.

View these accuracy metrics from your task's Analysis tab.
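The comparison described above can be sketched as follows. This is a minimal illustration, not Centaur's implementation: it assumes each case is a plain dictionary with hypothetical keys `reads` (labels submitted by the network) and `correct_label` (set only for Gold Standard cases), and it assumes the Majority Label is a simple plurality vote over reads. The actual aggregation may differ.

```python
from collections import Counter
from typing import Optional


def majority_label(reads: list[str]) -> Optional[str]:
    """Most common label among reads (assumed plurality vote).

    Ties go to the label seen first, because Counter.most_common keeps
    first-encountered order for equal counts. Returns None with no reads.
    """
    if not reads:
        return None
    return Counter(reads).most_common(1)[0][0]


def gold_standard_accuracy(cases: list[dict]) -> Optional[float]:
    """Share of Gold Standard cases whose Majority Label equals the Correct Label.

    Hypothetical case shape: {"reads": [...], "correct_label": str or None}.
    Cases with no correct_label (not Gold Standard) or no reads are skipped.
    """
    scored = [
        c for c in cases
        if c.get("correct_label") is not None and c.get("reads")
    ]
    if not scored:
        return None
    hits = sum(majority_label(c["reads"]) == c["correct_label"] for c in scored)
    return hits / len(scored)
```

For example, with one Gold Standard case whose reads agree with its Correct Label, one whose reads do not, and one case with no Correct Label, the accuracy is 0.5, because the third case is ignored.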

General Metrics

For all tasks, we show the label distribution, labeling rate, and confusion matrix.

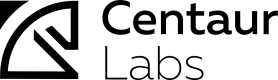

Confusion matrix

The confusion matrix cross-tabulates the Correct Label against the Majority Label for Gold Standard cases that have reads. Cells where the two labels agree count matching cases; all other cells count mismatches.

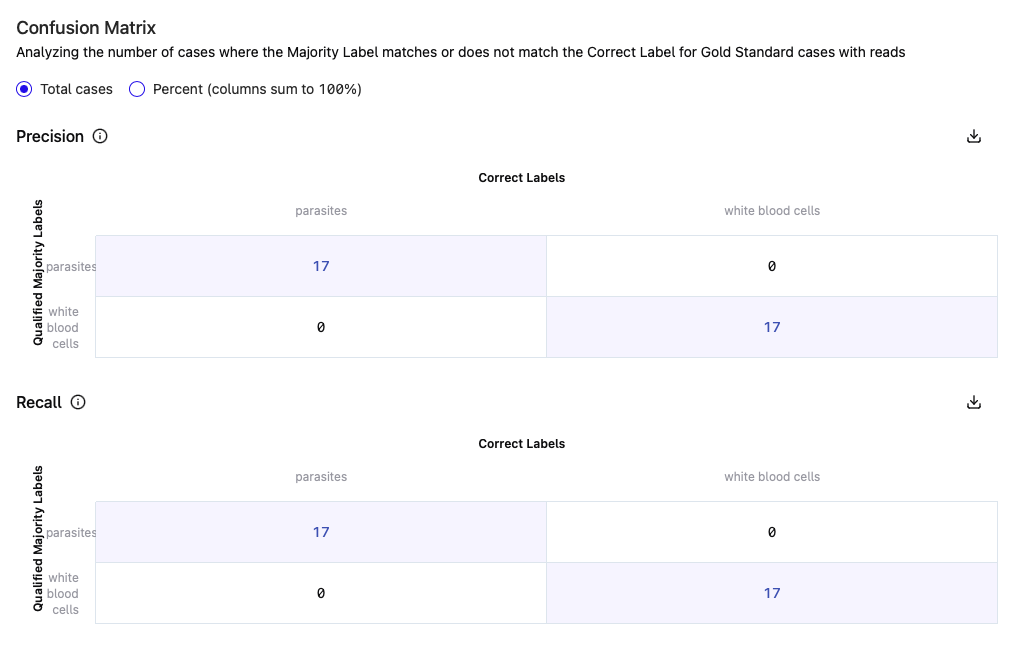
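To make the matrix concrete, here is a minimal sketch that tallies Gold Standard cases by (Correct Label, Majority Label) pair. The dictionary keys `correct_label` and `majority_label` are hypothetical, and a case is assumed to have reads whenever it has a Majority Label.

```python
from collections import Counter


def confusion_matrix(cases: list[dict]) -> Counter:
    """Count Gold Standard cases by (Correct Label, Majority Label) pair.

    Hypothetical case shape: {"correct_label": str or None, "majority_label": str or None}.
    Only cases with both labels (Gold Standard cases with reads) are counted.
    Pairs with equal labels are matches; all other pairs are mismatches.
    Missing pairs read as 0 because the result is a Counter.
    """
    return Counter(
        (c["correct_label"], c["majority_label"])
        for c in cases
        if c.get("correct_label") is not None and c.get("majority_label") is not None
    )
```

Reading the diagonal (pairs such as `("benign", "benign")`) gives the matches, while the off-diagonal cells show which classes the network tends to confuse.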

Label distribution

The label distribution bar graph compares the class breakdown of Gold Standard cases with the class breakdown of Labeled cases. If you know what class breakdown to expect, use this graph to check whether the data is consistent with it.

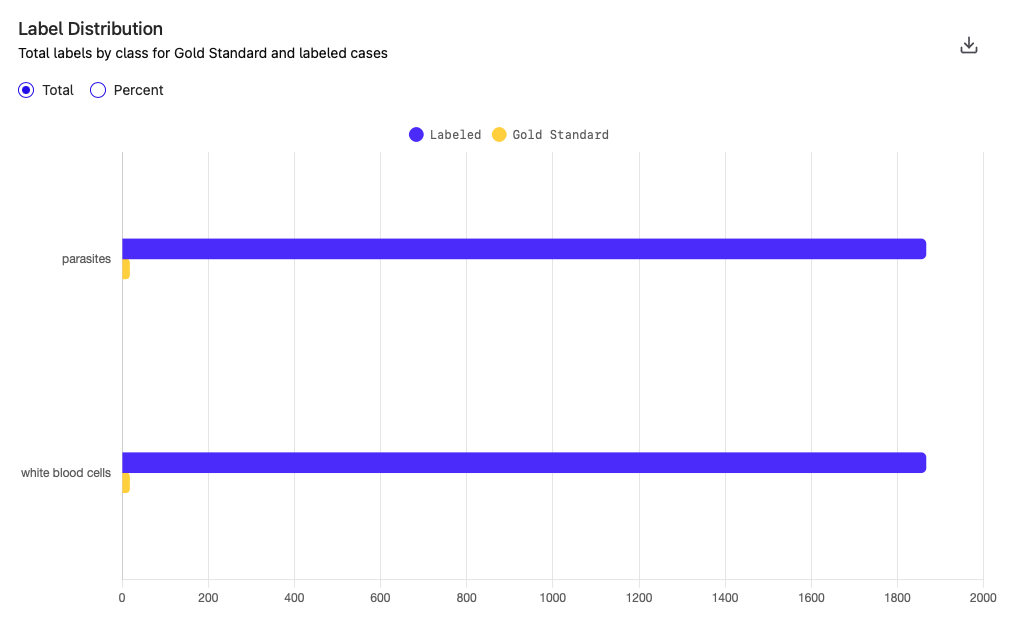
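A minimal sketch of the comparison behind this graph is shown below. It works with each class's share of the total rather than raw counts, so the two groups can be compared even when they differ in size. It assumes a Gold Standard case's class is its Correct Label and a Labeled case's class is its Majority Label; both the function names and that assignment are illustrative assumptions.

```python
from collections import Counter


def class_shares(labels: list[str]) -> dict[str, float]:
    """Convert a list of class labels into each class's share of the total."""
    counts = Counter(labels)
    total = sum(counts.values())
    return {label: n / total for label, n in counts.items()} if total else {}


def label_distribution(
    gold_labels: list[str], labeled: list[str]
) -> dict[str, tuple[float, float]]:
    """Pair each class's share among Gold Standard cases with its share among Labeled cases.

    gold_labels: Correct Labels of Gold Standard cases.
    labeled: Majority Labels of Labeled cases (assumed class assignment).
    A class missing from one group gets a share of 0.0 there.
    """
    gold, lab = class_shares(gold_labels), class_shares(labeled)
    classes = sorted(set(gold) | set(lab))
    return {c: (gold.get(c, 0.0), lab.get(c, 0.0)) for c in classes}
```

A class whose two shares differ sharply may be worth a closer look, since it could mean the Gold Standard set is not representative of the task or that the network over- or under-calls that class.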

Labeling rate

The labeling rate chart shows the cumulative number of cases labeled as of each day. It includes only dates on which contests were running, because that is when cases move to Labeled.

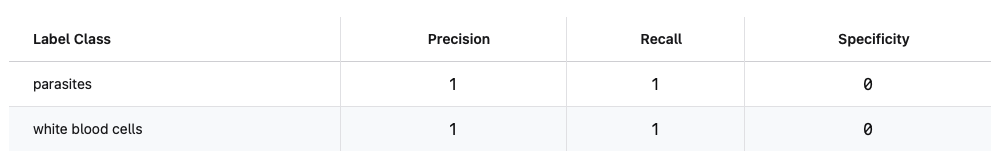
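The cumulative count plotted in this chart can be sketched as below. The inputs are assumptions for illustration: a hypothetical list holding the date each case moved to Labeled, and a set of dates on which contests were running. The running total includes every case labeled on or before a day, but a point is emitted only for contest days.

```python
from collections import Counter
from datetime import date


def labeling_rate(
    labeled_dates: list[date], contest_days: set[date]
) -> list[tuple[date, int]]:
    """Cumulative number of Labeled cases as of each contest day.

    labeled_dates: the (hypothetical) date each case moved to Labeled.
    contest_days: dates on which contests were running; only these are returned.
    """
    per_day = Counter(labeled_dates)
    running = 0
    points = []
    # Walk every relevant date in order so the running total stays correct,
    # but only record a point on days when a contest was running.
    for day in sorted(set(per_day) | contest_days):
        running += per_day[day]
        if day in contest_days:
            points.append((day, running))
    return points
```

A contest day on which no cases were labeled still appears, with the same total as the previous point, which is why the chart is flat on such days.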

Per-class metrics

The Per-class metrics tab shows precision, recall, and specificity for each labeling class in the task.
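These values can be derived from the confusion matrix by treating each class one-vs-rest, which is the usual convention for multi-class data (whether the Analysis tab uses exactly this convention is an assumption). For a class k, with true positives TP, false positives FP, false negatives FN, and true negatives TN: precision = TP / (TP + FP), recall = TP / (TP + FN), and specificity = TN / (TN + FP). The sketch below takes a matrix keyed by hypothetical (Correct Label, Majority Label) pairs and returns None where a denominator is zero.

```python
from typing import Optional


def _ratio(num: int, den: int) -> Optional[float]:
    """Safe division: None when the denominator is zero (metric undefined)."""
    return num / den if den else None


def per_class_metrics(
    matrix: dict[tuple[str, str], int]
) -> dict[str, dict[str, Optional[float]]]:
    """One-vs-rest precision, recall, and specificity for each class.

    matrix maps (correct_label, majority_label) -> number of Gold Standard cases.
    For class k, Correct Label k is the positive truth and Majority Label k
    is the positive prediction.
    """
    total = sum(matrix.values())
    classes = sorted({label for pair in matrix for label in pair})
    results = {}
    for k in classes:
        tp = matrix.get((k, k), 0)
        fp = sum(n for (truth, pred), n in matrix.items() if pred == k and truth != k)
        fn = sum(n for (truth, pred), n in matrix.items() if truth == k and pred != k)
        tn = total - tp - fp - fn
        results[k] = {
            "precision": _ratio(tp, tp + fp),
            "recall": _ratio(tp, tp + fn),
            "specificity": _ratio(tn, tn + fp),
        }
    return results
```

For instance, with 8 cases correctly labeled "a", 2 "a" cases labeled "b", 1 "b" case labeled "a", and 9 cases correctly labeled "b", class "a" has precision 8/9, recall 0.8, and specificity 0.9.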
