Boxes, lines, circles

Agreement and difficulty for box, line, and circle segmentations are based on the F1 score. To compute the F1 score, we first need to identify which of the segmentations a user has drawn are accurate. To do this, we set a distance threshold. A user’s segmentation is considered accurate if it lies within the distance threshold of a Correct or Majority Label segmentation that has not already been matched to another of the user’s segmentations.
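
The matching step can be sketched in Python as follows. This is a minimal illustration, not the production implementation. It assumes each segmentation is reduced to a single representative point (for example, a box center), that distance is Euclidean in pixels, and that each user segmentation greedily claims the closest still-unassigned reference segmentation. The function name `match_segmentations` is hypothetical.

```python
import math

# Assumption: each segmentation is represented by one (x, y) point in pixels.
Point = tuple[float, float]


def match_segmentations(user: list[Point], reference: list[Point], threshold: float) -> int:
    """Count user segmentations within `threshold` of a still-unassigned reference segmentation."""
    unassigned = list(range(len(reference)))
    accurate = 0
    for u in user:
        if not unassigned:
            break  # every reference segmentation has already been matched
        # Greedy assumption: take the closest reference segmentation not yet claimed.
        best = min(unassigned, key=lambda i: math.dist(u, reference[i]))
        if math.dist(u, reference[best]) <= threshold:
            unassigned.remove(best)  # each reference segmentation can be matched only once
            accurate += 1
    return accurate
```

A greedy pass is the simplest choice; an optimal one-to-one assignment could match a few more pairs in crowded images.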

We then calculate the F1 score for each Top Label based on its accurate segmentations. The Top Labels are the ~5 best reads on each case, ranked by each labeler’s trailing average accuracy.

- Precision: Number of the user’s accurate segmentations divided by the number of all the user’s segmentations.

- Recall: Number of the user’s accurate segmentations divided by the number of segmentations in the Correct or Majority Label.

- F1: Harmonic mean of precision and recall.
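
Given the number of accurate segmentations, the three metrics can be computed as below. `f1_score` is a hypothetical helper. Exact fractions are used so results line up with the worked examples. The handling of empty labels is an assumption, since the documentation does not cover that case.

```python
from fractions import Fraction


def f1_score(accurate: int, n_user: int, n_reference: int) -> Fraction:
    """F1 from the count of accurate user segmentations and the two segmentation totals."""
    if n_user == 0 or n_reference == 0:
        # Assumption: two empty labels agree perfectly; one empty label scores 0.
        return Fraction(1) if n_user == n_reference else Fraction(0)
    precision = Fraction(accurate, n_user)       # accurate / all user segmentations
    recall = Fraction(accurate, n_reference)     # accurate / all reference segmentations
    if precision + recall == 0:
        return Fraction(0)
    return 2 * precision * recall / (precision + recall)  # harmonic mean
```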

Lastly, we use these F1 scores to calculate agreement and difficulty:

- Agreement: average F1 score between each of the Top Labels and the Majority Label

- Difficulty: 1 - average F1 score between each of the Top Labels and the Correct Label
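
Combining the per-Top-Label F1 scores into the two case-level metrics is then a plain average. A sketch, assuming an unweighted mean over the Top Labels (the function names are hypothetical):

```python
from statistics import fmean


def agreement(f1_vs_majority: list[float]) -> float:
    """Average F1 between each Top Label and the Majority Label."""
    return fmean(f1_vs_majority)


def difficulty(f1_vs_correct: list[float]) -> float:
    """1 minus the average F1 between each Top Label and the Correct Label."""
    return 1 - fmean(f1_vs_correct)
```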

Agreement

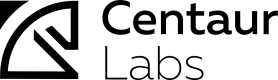

Let’s walk through an example.

Assume the distance threshold is 4 pixels.

This diagram shows a Top Label from a user (labeled “User Read” in the image) and the Majority Label. Here, only two of the user’s three segmentations are considered accurate; the box at the top is 12 pixels from the closest unassigned Majority Label segmentation, which exceeds the 4-pixel threshold.

- Precision: There are 2 accurate segmentations, and the user drew 3 segmentations in total, so the precision is 2/3.

- Recall: Only 2 of the 5 Majority Label segmentations are matched by an accurate user segmentation, so the recall is 2/5.

- F1: The harmonic mean of 2/3 and 2/5 is 2 × (2/3 × 2/5) / (2/3 + 2/5) = (8/15) / (16/15) = 1/2.
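
The arithmetic in this example can be checked with Python’s `fractions` module:

```python
from fractions import Fraction

accurate, n_user, n_majority = 2, 3, 5  # counts from the agreement example

precision = Fraction(accurate, n_user)     # 2/3
recall = Fraction(accurate, n_majority)    # 2/5
f1 = 2 * precision * recall / (precision + recall)
print(precision, recall, f1)  # 2/3 2/5 1/2
```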

The agreement can then be calculated with the formula above, using the F1 score of this Top Label together with the F1 scores of the other Top Labels.

For multi-class segmentation, agreement is calculated for each class individually using the methodology above, and the average across all classes is the agreement for the case.
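
A sketch of the per-class averaging, assuming an unweighted mean over classes. `case_agreement` and the class names in the check are hypothetical. The same averaging applies to per-class difficulty.

```python
from statistics import fmean


def case_agreement(per_class_agreement: dict[str, float]) -> float:
    """Average per-class agreement scores (assumed unweighted) into one case-level score."""
    if not per_class_agreement:
        raise ValueError("no classes to average")
    return fmean(per_class_agreement.values())
```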

Difficulty

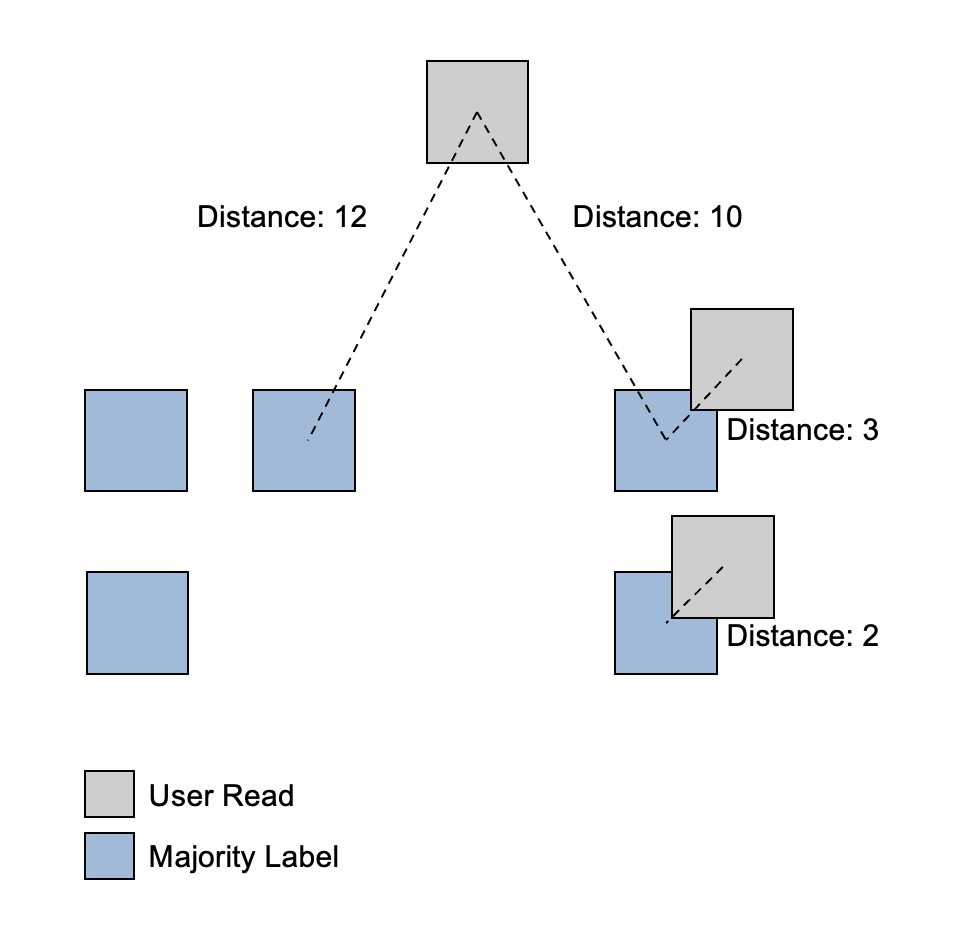

Difficulty is determined using the same precision and recall calculations, but each Top Label’s segmentations are compared against the Correct Label instead of the Majority Label.

Looking at this diagram of the Top Label (labeled “User Read” in the image) and the Correct Label, and assuming the distance threshold is still 4 pixels, three of the four user segmentations are considered accurate.

- Precision: There are 3 accurate segmentations, and the user drew 4 segmentations, so the precision is 3/4.

- Recall: 3 of the 4 Correct Label segmentations are matched by an accurate user segmentation, so the recall is 3/4.

- F1: The harmonic mean of 3/4 and 3/4 is 3/4 (when precision and recall are equal, F1 equals both).
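
The same check for the difficulty example. It also shows the difficulty this Top Label alone would imply, as a hypothetical simplification: a real case averages over all of its Top Labels.

```python
from fractions import Fraction

accurate, n_user, n_correct = 3, 4, 4  # counts from the difficulty example

precision = Fraction(accurate, n_user)    # 3/4
recall = Fraction(accurate, n_correct)    # 3/4
f1 = 2 * precision * recall / (precision + recall)

# Hypothetical: if this were the only Top Label on the case, difficulty would be 1 - F1.
difficulty_single = 1 - f1
print(f1, difficulty_single)  # 3/4 1/4
```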

The difficulty can then be calculated with the formula above, using the F1 score of this Top Label together with the F1 scores of the other Top Labels.

For multi-class segmentation, difficulty is calculated for each class individually using the methodology above, and the average across all classes is the difficulty for the case.
